Identifying and fixing faulty RAM on my homelab server

March 30, 2025 by Andrew Dawes

What happened…

Some time last year, I started noticing a concerning trend.

Occasionally, one of the servers in my home lab would “lock up”. All the services on the host would go down, and SSH, ping, and even local console access became unresponsive. When I opened a local console, I could see logs still being printed to the screen, but I could not type or execute any commands – it was as if my keyboard were disconnected.

A quick reboot always temporarily solved the problem, so for a while, I just kept rebooting as needed.

However, I recently found the time (and wits) to do a deeper dive – and what I found was surprising.

I had assumed it was likely a software issue – perhaps the operating system (Ubuntu) or the hypervisor I am running (LXD).

Or maybe it was the BIOS configuration – I hadn’t customized much about my B450 Tomahawk Max II motherboard’s BIOS settings, but perhaps more customization was needed?

At worst, I had assumed it might be incompatible hardware – since I had built the server myself a few years ago, there was a chance that my choice of components was founded on faulty assumptions.

To start my deep dive, I first rebooted the machine.

Once things were up and running again, I logged in at a local console and used the journalctl command to view all logs from the previous boot:

```
journalctl -b -1 | less
```

As I searched through the logs, I noticed that PackageKit had attempted to run overnight but ran into a kernel error:

```
Mar 21 01:06:51 localhost systemd[1]: Starting packagekit.service - PackageKit Daemon...
Mar 21 01:06:51 localhost PackageKit[505046]: daemon start
Mar 21 01:06:51 localhost dbus-daemon[1590]: [system] Successfully activated service 'org.freedesktop.PackageKit'
Mar 21 01:06:51 localhost systemd[1]: Started packagekit.service - PackageKit Daemon.
Mar 21 01:06:56 localhost kernel: general protection fault, probably for non-canonical address 0x27a10e80c95b3098: 0000 [#1] PREEMPT SMP NOPTI
Mar 21 01:06:56 localhost kernel: CPU: 13 PID: 505036 Comm: apt-get Tainted: P O 6.8.0-55-generic #57-Ubuntu
Mar 21 01:06:56 localhost kernel: Hardware name: Micro-Star International Co., Ltd MS-7C02/B450 TOMAHAWK MAX II (MS-7C02), BIOS H.C0 10/14/2023
```

This line in particular stood out to me because it pointed to a memory problem: the kernel had tried to use a non-canonical (invalid) memory address, which can happen when a pointer gets corrupted, for example by faulty RAM:

```
Mar 21 01:06:56 localhost kernel: general protection fault, probably for non-canonical address 0x27a10e80c95b3098: 0000 [#1] PREEMPT SMP NOPTI
```

I did some research, and several articles I read recommended isolating the problem to determine whether it was hardware- or software-related.

Specifically, the articles suggested using a tool called memtest (in my case, memtest86+) to check for faulty RAM.
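If you are curious what “non-canonical address” in that kernel log line actually means, here is a small bash sketch (not something from my original troubleshooting). It assumes x86-64 with 48-bit virtual addresses (4-level paging), where an address is canonical only if bits 63 through 47 are all zeros or all ones. The helper names `gpf_addresses` and `is_canonical` are hypothetical: the first pulls the faulting address out of “general protection fault” lines, and the second classifies it.

```bash
#!/usr/bin/env bash
# Sketch only: extract the faulting address from "general protection fault"
# kernel log lines and check whether it is canonical.
# Assumption: x86-64 with 48-bit virtual addresses (4-level paging), where an
# address is canonical only if bits 63..47 are all 0s or all 1s.

# gpf_addresses: read log text on stdin, print one address per matching line.
gpf_addresses() {
    sed -n 's/.*general protection fault.*address \(0x[0-9a-fA-F]*\).*/\1/p'
}

# is_canonical ADDR: print "canonical" or "non-canonical".
# Works on the hex string, so it never relies on 64-bit integer overflow.
is_canonical() {
    local hex=${1,,}                                 # lowercase everything
    hex=${hex#0x}                                    # drop the 0x prefix
    while (( ${#hex} < 16 )); do hex="0$hex"; done   # left-pad to 64 bits
    # Bits 63..48 are the first 4 hex digits; bit 47 is the high bit of the
    # 5th digit. Canonical means all of those bits are 0, or all are 1.
    case "${hex:0:5}" in
        0000[0-7] | ffff[89a-f]) echo "canonical" ;;
        *) echo "non-canonical" ;;
    esac
}

# Example usage (kernel messages from the previous boot):
#   journalctl -k -b -1 | gpf_addresses | while read -r addr; do
#       echo "$addr $(is_canonical "$addr")"
#   done
```

For the line above, `is_canonical 0x27a10e80c95b3098` prints `non-canonical`: the top bits start with `27a1`, which is neither all zeros nor all ones. A real program should never produce such an address, which is why a corrupted pointer (and, by extension, bad RAM) is a plausible suspect.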

I installed memtest:

```
sudo apt update && sudo apt install memtest86+
```

Upon installation, apt printed a message indicating that memtest86+ had updated the GRUB configuration.

This makes it possible to run memtest on boot – instead of running Ubuntu (or your choice of OS), you can actually boot into memtest.
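If you want to confirm the new boot menu entry exists before rebooting, a small bash sketch like the one below lists the memtest entries in the generated GRUB config. It assumes the config lives at `/boot/grub/grub.cfg` (the usual path on Ubuntu) and that menu titles are single-quoted; `memtest_entries` is a hypothetical helper name. Exact titles vary by memtest86+ version and firmware mode, so it only matches on the word “memtest”.

```bash
#!/usr/bin/env bash
# Sketch only: list GRUB menu entries whose title mentions memtest.
# Assumptions: the generated config is at /boot/grub/grub.cfg (the usual
# path on Ubuntu) and menu titles are single-quoted, e.g.
#   menuentry 'Some title' ... {

# memtest_entries [GRUB_CFG]: print the title of each matching menuentry.
memtest_entries() {
    local cfg=${1:-/boot/grub/grub.cfg}
    sed -n "s/^[[:space:]]*menuentry '\([^']*[Mm]emtest[^']*\)'.*/\1/p" "$cfg"
}

# Example usage (reading grub.cfg may need sudo on some systems):
#   memtest_entries
```

An empty result would suggest the GRUB config was not regenerated, in which case running `sudo update-grub` is worth a try before rebooting.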

So I rebooted!

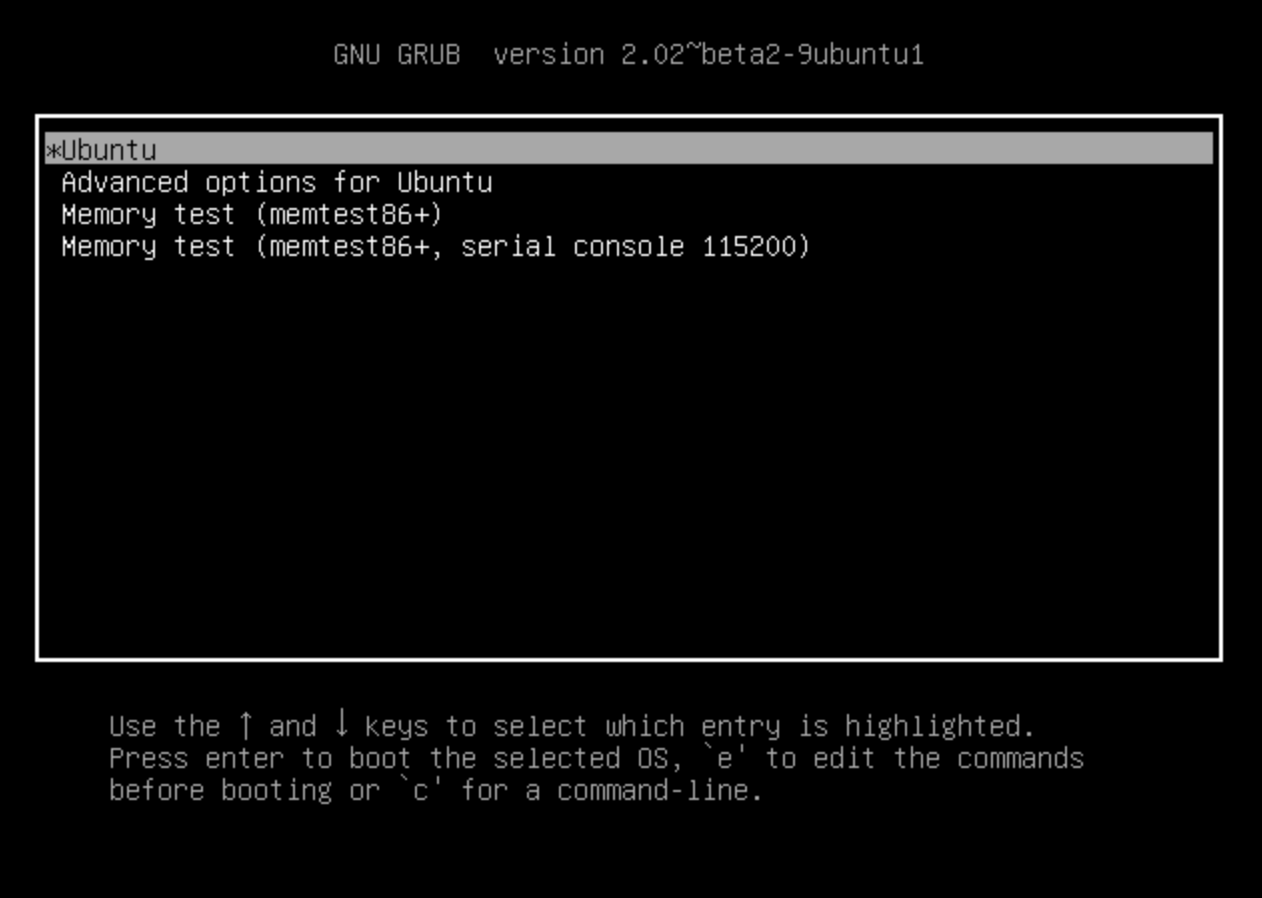

At my bootloader menu, I chose Memory test (memtest86+):

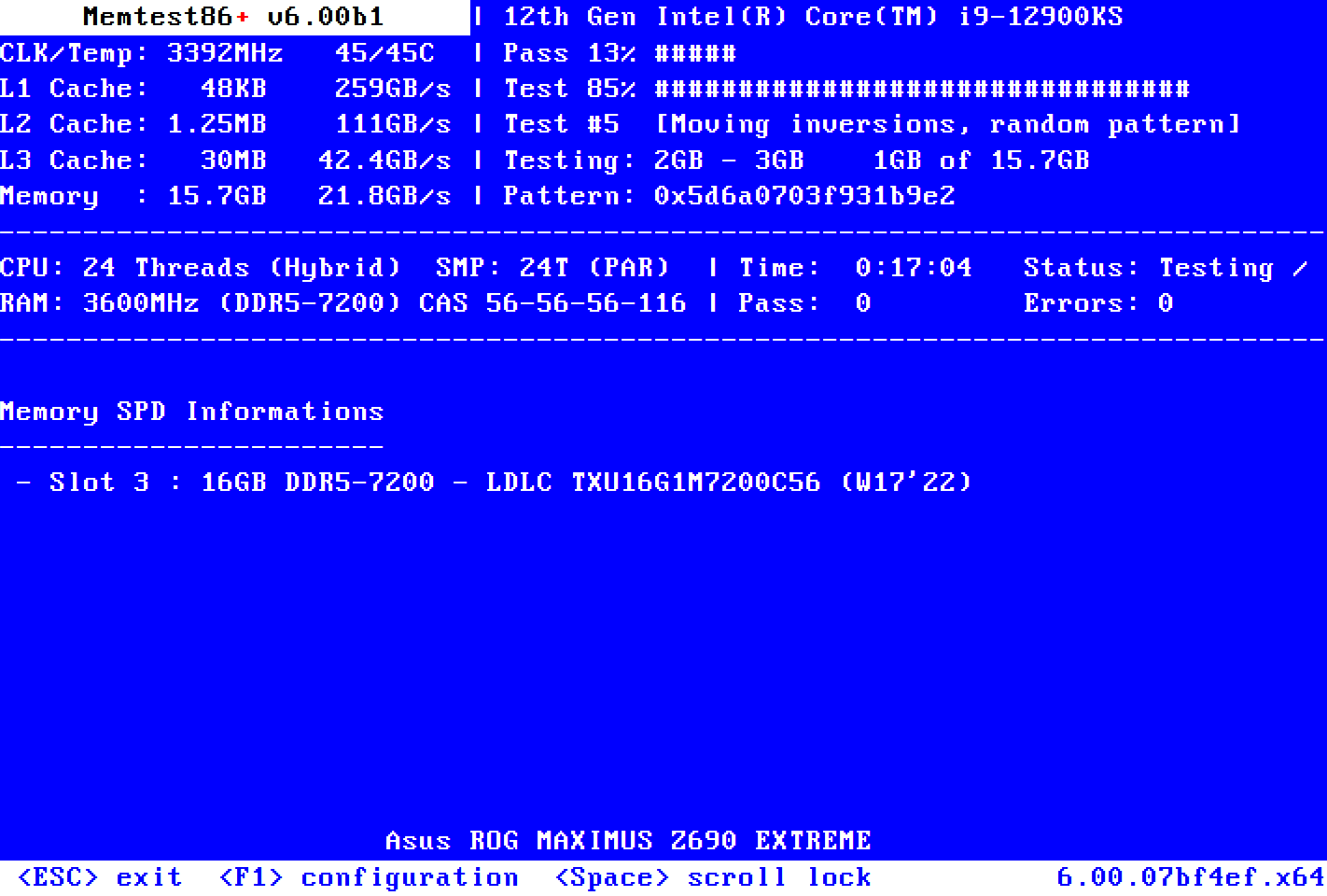

And memtest started running…

…and memtest failed on the first pass!

It was then I knew I likely had a faulty stick of RAM.

It was a terrible feeling (“Did I purchase a faulty stick? Did they sell it to me that way?” and “I can’t believe I spent so much time rebooting and waiting/worrying!”).

But at the same time, I also felt very relieved (“I finally know what the problem is!” and “I can fix this!”).

The next step was to identify which stick of RAM was faulty. From what I was seeing online, this would require a process of experimentation.

What I did to fix it…

I took advice from several other articles and forum posts, and labeled each stick of RAM with a piece of tape and a permanent marker.

I came up with fun and creative names for each stick of RAM so that I could easily keep track of which was which. I named mine after popular Marvel Avengers – “Captain America” and “Iron Man”.

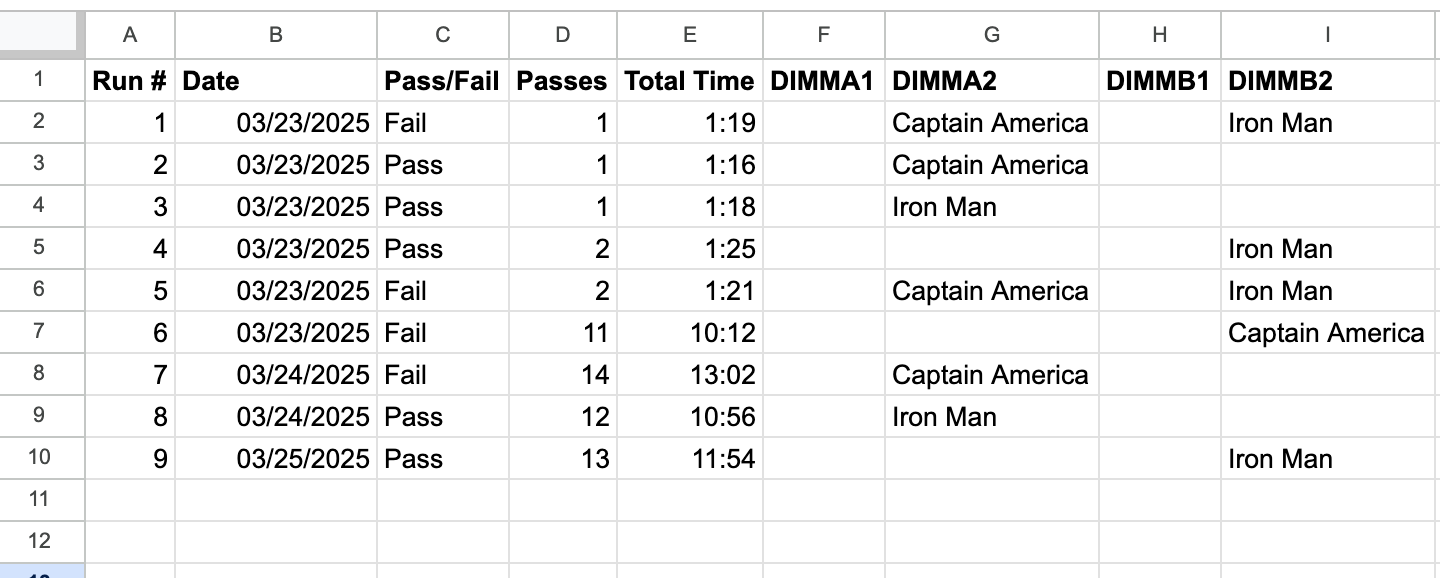

I also created a Google Sheet like this one to record the results of my tests:

…after 9 tests, the results were clear: Captain America had betrayed the Avengers and was conspiring with Hydra to bring down my server! Only Iron Man could be trusted to reliably store bytes.
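If you would rather keep your results in a plain CSV file than a spreadsheet, a short bash/awk sketch can do the tallying. Everything here is hypothetical: the file layout (a `stick,slot,passes,result` header), the `summarize_results` name, and the default threshold. It counts failures per stick and per slot, which supports the usual reasoning: if failures follow one stick from slot to slot, the stick is the likely culprit; if they follow one slot no matter which stick is in it, suspect the slot or motherboard instead. It also flags any Pass recorded after too few passes as inconclusive.

```bash
#!/usr/bin/env bash
# Sketch only: summarize memtest results kept in a CSV file.
# Assumed (hypothetical) layout, one row per test run:
#   stick,slot,passes,result      <- header row
# where "result" is Pass or Fail and "passes" is how many full passes ran.

# summarize_results FILE [MIN_PASSES]
# Prints failure counts per stick and per slot, and flags any Pass recorded
# after fewer than MIN_PASSES passes as inconclusive. The default of 4 is an
# arbitrary placeholder, not a recommendation from memtest.
summarize_results() {
    local csv=$1 min_passes=${2:-4}
    awk -F',' -v min="$min_passes" '
        { sub(/\r$/, "") }          # tolerate Windows line endings in exports
        NR == 1 { next }            # skip the header row
        $4 == "Fail" { fail_stick[$1]++; fail_slot[$2]++ }
        $4 == "Pass" && $3 + 0 < min + 0 {
            print "inconclusive: " $1 " in " $2 " (passes: " $3 ")"
        }
        END {
            for (s in fail_stick) print "stick " s ": " fail_stick[s] " fail(s)"
            for (s in fail_slot)  print "slot " s ": " fail_slot[s] " fail(s)"
        }
    ' "$csv"
}

# Example usage: summarize_results memtest-results.csv 4
```

awk does not guarantee the order of the per-stick and per-slot summary lines, so read them as a set rather than a ranking.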

I put both Captain America and Iron Man in a ziplock bag. Since memory modules are usually sold and installed in matched pairs (for dual-channel operation), I decided I would just purchase a brand new pair and, if one of those ever fails, swap Iron Man back into the action.

My next pair of “Avengers” arrived earlier this week. Needless to say, I did some extensive testing with memtest after installing those new modules to help me gain some peace of mind about my server’s ability to stay up and running (and as a way of doing some additional quality control for the manufacturer!).

A word to the wise: memtest runs indefinitely, repeating its tests pass after pass until you stop it, so it is up to you to decide when a test is “done”. As you can see from the spreadsheet of results above, stopping too early can lead to false negatives.

Put differently: at first, I didn’t realize that it sometimes takes several passes to detect a memory fault – so I ended tests prematurely and recorded a Pass when, had I waited a little longer, I would have observed a Fail.

So, as a recommendation: for each test, you may want to let memtest run for at least several hours (enough for several complete passes) before ending that test and recording your results.
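Why do a few extra passes matter so much? A rough model helps, under a simplifying assumption that memtest itself does not guarantee: suppose a faulty stick produces a detectable error on any single pass with a fixed probability p, independently of other passes. The chance that n passes all come back clean (a false negative) is then (1 - p)^n. Taking a purely illustrative p = 0.3 (not a measured value):

```latex
\begin{aligned}
P(\text{no error in } n \text{ passes}) &= (1 - p)^{n} \\
p = 0.3:\quad (0.7)^{4} &\approx 0.24, \qquad (0.7)^{10} \approx 0.028 \\
(1 - p)^{n} \le 0.01 \;\Longrightarrow\; n &\ge \frac{\ln 0.01}{\ln(1 - p)} = \frac{\ln 0.01}{\ln 0.7} \approx 12.9 \;\Rightarrow\; n = 13
\end{aligned}
```

Under this model, a stick that fails on roughly one pass in three would still look clean after four passes about a quarter of the time, and it takes 13 passes to push that risk below 1%. Real faults can be less regular (they may depend on temperature, test pattern, or which addresses are exercised), so treat this as intuition for why a single clean pass proves little, not as a precise stopping rule.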